Transforming Change

As IT infrastructures become more complex and methods of working evolve, how does IT Operations Management (ITOM) keep up with the demands of customers, their development counterparts, and IT Leadership? This article explores the digital transformation journey of one organization as it struggled to keep up with new methodologies and a development-driven business model. At the core was an outmoded Change Management process that desperately needed to be modernized for Agile Development and a burgeoning DevOps practice. Through incremental and iterative organizational change, the operations group was able to design a path to accommodate advanced development models, while establishing valuable governance around the development lifecycle.

Background

Classic Waterfall Development Model

It is nearly impossible to talk about IT without including some discussion of development. Indeed, as a long-term member of the IT Operations Management side of the business, the author has tried, without success. This article is not specifically about software and application development, but in order to properly discuss operations, it is important to acknowledge how development has changed over the past twenty years. Historically, development followed what came to be called the ‘waterfall’ model. That is, developers and project managers spent time at the beginning of a project gathering all the requirements (the goals and expectations of the customer), and then went away for another amount of

Then There Was DevOps

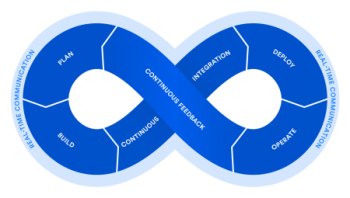

Of course, developers are developers after all. So, even as Agile Development grew, even more radical ways of working began to emerge. Indeed, by the time the term DevOps began to enter our lexicon, many IT organizations had already begun adopting the model. The primary focus of DevOps is to eliminate the traditional silos (and constant bickering) between developers and operators, creating a seamless pipeline from code (Dev) to product (Ops). This has primarily been accomplished using various combinations of automation tools for code check-in (Continuous Integration), testing and staging (Continuous Delivery), and deployment to production (Continuous Deployment). This is what has come to be known as CI/CD.
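As a rough illustration of how these stages chain together, the Python sketch below models a pipeline as an ordered list of automated gates. The stage names, the `Stage` and `run_pipeline` helpers, and the boolean flags on the commit are all hypothetical; a real pipeline would invoke build, test, and deployment tooling rather than read flags from a dictionary.

```python
from dataclasses import dataclass, field
from typing import Callable, List, Optional


@dataclass
class Stage:
    name: str
    gate: Callable[[dict], bool]  # returns True if the commit may proceed


@dataclass
class PipelineResult:
    passed: List[str] = field(default_factory=list)
    failed_at: Optional[str] = None
    deployed: bool = False


def run_pipeline(commit: dict, stages: List[Stage]) -> PipelineResult:
    """Run each automated gate in order, stopping at the first failure."""
    result = PipelineResult()
    for stage in stages:
        if not stage.gate(commit):
            result.failed_at = stage.name  # the commit goes no further
            return result
        result.passed.append(stage.name)
    # Every gate passed: with Continuous Deployment, nobody approves this step.
    result.deployed = True
    return result


# Hypothetical gates; real ones would call build and test tooling.
STAGES = [
    Stage("continuous integration",
          lambda c: c["builds"] and c["unit_tests_pass"]),
    Stage("continuous delivery",
          lambda c: c["staging_tests_pass"]),
]
```

A commit that clears every gate is marked as deployed with no approval step in between. That is what distinguishes Continuous Deployment from Continuous Delivery, where a person still decides when to release.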

All this advancement in application and software development has been embraced by many SMBs and is becoming increasingly common even in larger, risk-averse enterprises. But it has not come without pitfalls. As we continue down the path of maximum efficiency, we would be wise to consider the issues that accompany such increased change velocity.

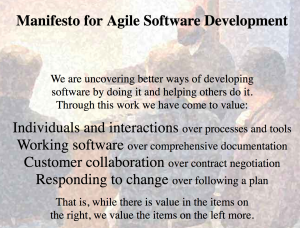

We Love Agile

Many of the ideas behind Agile Development trace back to manufacturing practices such as Lean and Kanban. Whatever name we give it, the idea is to have a faster, more adaptive, and theoretically more stable process for deploying new products. In IT, this began (and continues) in application development. There is a lot to like about this methodology. It allows both developers and customers to check in on progress frequently, see real results sooner, and adapt to changing requirements and goals with relative ease. But it also means more changes are happening, often with less oversight. With the Agile Manifesto’s ideal of valuing “individuals and interactions over processes and tools,” it is easy for an organization to slide off the path of rigorous Change Control, convinced that velocity alone will produce the right outcomes. To be sure, processes should never get in the way. The goal of building and following a process is to create a repeatable way of working that enables, not constrains, efficiency and effectiveness.

Is DevOps the Right Move?

Issues arising from process contention only magnify as we apply the DevOps model. In addition, visibility into the entire development lifecycle decreases. In a fully functioning CI/CD environment, once any piece of code is checked in, it will be deployed to production without further human intervention, assuming it passes all its automated tests. While this gives great flexibility to developers to quickly deploy new features and functionality, it quite frankly scares the pants off system administrators. Lack of control coupled with an inability to predict when and where new functionality will appear in production can be nothing short of an operational nightmare.

All these concerns, however, should not necessarily discourage an organization from embracing this way of working. It is exceptionally efficient, and because most changes are small, it can reduce the consumer anxiety that comes with larger, wholesale modifications that fundamentally change the user experience, even though, strung together, the small changes eventually add up to equally transformative enhancements. That helps our value stream remain stable while improving the customer journey over time.

DevOps in the Real World

The author had the opportunity to work on a process improvement initiative in a highly regulated, publicly traded insurance organization. While the project itself was focused primarily on the strategic, tactical, and operational practices of the IT organization, the development side of the house was very active and influential. More importantly, development had the attention of the business. This presented a unique opportunity to discuss and even help shape the digital strategy around future development and deployment practices.

First Impressions

Initially, there were many skeptical stakeholders, concerned that enhanced processes would stifle the velocity and efficiency of a relatively unchecked development cycle. And, as in many organizations, the development models in use were as varied as the personalities behind them. There had been many recent shake-ups within the IT organization, and for Application Development, this meant a restructuring into multiple autonomous teams, each left to decide its own practice. The organization supported everything from mainframe computing to highly diverse web applications, which resulted in wildly different processes to get from product request to product reality. Some teams were still operating within a waterfall model, while others had fully embraced Agile. The User Experience (UX) team was pushing very hard to move to CI/CD; given the promise that model holds for consumer web applications, this was no surprise.

But there was an underlying concern that was not immediately voiced, though it became clear quickly enough as each team laid out its practices and understanding of process: a lot of code was being released with bugs. Because there was little transparency between operations and development, this was kept relatively well hidden, as each development team had convinced its customers to report bugs directly to them rather than creating operational Incidents when new code caused issues. And the development teams were very responsive. Because these issues did not pose any substantial concern to the business overall, there was little incentive to change.

And herein lies one of the problems with adopting higher-velocity development models. While the business is relatively pleased with the improvement in velocity, operations becomes saddled with handling issues released into production with little control or oversight. After we sat down with several senior IT directors, some ideas around maturity and the rate of organizational change began to emerge. The most crucial of these concerned the necessity and burden of the age-old ITSM albatross: the Change Advisory Board (CAB).

Change Enablement

We spoke a lot about the CAB, which met weekly with a large crowd for the size of the IT organization (usually close to 20 people in attendance). Most of the development teams were in the ‘volun-told’ camp, attending only because it was absolutely necessary. Each time, they would question the value and necessity of the meeting, and after the author listened in on one occasion, it was clear that the meeting was primarily a rubber-stamp mill for change requests. Part of the issue seemed to be that the development release process was mostly a black box, so IT leadership was completely out of the loop on how each team built, tested, and prepared its code for deployment. Still, the development teams were pushing for even more automation and less oversight. Mercifully, the CIO was not interested in eliminating what was essentially the only remaining bastion of control and visibility that IT Operations had over the deployment cycle.

So, how was an organization with such a development-centric business model going to improve process and practice stability while enabling efficient, high-velocity deployment models? One of the most innovative, yet under-the-radar, changes made in ITIL 4 (the latest edition of the framework) happens to provide some very valuable guidance on the Change Management (now Change Enablement) practice. With about as little fanfare as one can imagine, the newest publication practically eliminates the idea of a centralized, all-encompassing change authority (the CAB) in favor of establishing change authorities that better align with various release and deployment models. That is, multiple, dynamic change authorities that can provide assessment, authorization, and scheduling support without rigid meeting schedules and extensive (and often not very valuable) discussions regarding every change.
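To make the idea of multiple change authorities more concrete, here is a minimal Python sketch that routes a change to an authority based on its type and risk. ITIL 4 does distinguish standard, normal, and emergency changes, but the risk levels, the routing rules, and the authority names here are assumptions for illustration; each organization defines its own.

```python
from enum import Enum


class ChangeType(Enum):
    STANDARD = "standard"    # pre-authorized, low risk, well understood
    NORMAL = "normal"        # assessed and authorized case by case
    EMERGENCY = "emergency"  # must be implemented as soon as possible


class Risk(Enum):
    LOW = 1
    MEDIUM = 2
    HIGH = 3


def change_authority(change_type: ChangeType, risk: Risk) -> str:
    """Pick an authority for a change (hypothetical routing rules)."""
    if change_type is ChangeType.STANDARD:
        return "pre-authorized"  # no per-change approval needed
    if change_type is ChangeType.EMERGENCY:
        return "emergency change authority"
    # Normal changes: authorize as close to the work as the risk allows.
    if risk is Risk.LOW:
        return "peer review within the product team"
    if risk is Risk.MEDIUM:
        return "product and service owners"
    return "CAB"
```

Note that high-risk normal changes still land at the CAB in this sketch, so a central board survives for the changes that genuinely need it while routine work is authorized closer to the team.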

But first, we had to take a step back. Given the level of regulatory rigor the organization was required to maintain, simply eliminating the CAB was not an immediate option. So, we set off down the path toward this transformative approach by recalibrating the existing Change Enablement practice. The most obvious starting point was the fact that Standard Change (a formidable tool for enabling high-velocity, well-understood deployments with a substantially lower bar for oversight) had been eliminated. This had been done mostly because many teams had begun to abuse the process, making high-risk changes to the environment with no formal authorization in place. So, the problem was one of policy and governance.
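One way to encode that governance fix is to let a change qualify as standard only when it matches a pre-authorized template and stays within the risk that template was approved for. The template catalog, its IDs, and the 1 to 5 risk scale in this sketch are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class StandardChangeTemplate:
    template_id: str
    description: str
    max_risk: int  # highest risk score (1-5) the pre-authorization covers


# Hypothetical catalog, maintained and reviewed under change governance.
CATALOG: Dict[str, StandardChangeTemplate] = {
    "STD-001": StandardChangeTemplate("STD-001", "Restart application pool", 2),
    "STD-002": StandardChangeTemplate("STD-002", "Apply approved OS patch to web tier", 2),
}


def classify(template_id: Optional[str], risk_score: int) -> str:
    """Return "standard" only for in-scope use of a pre-authorized template."""
    template = CATALOG.get(template_id) if template_id is not None else None
    if template is None:
        return "normal"  # no matching template: assess and authorize
    if risk_score > template.max_risk:
        return "normal"  # riskier than the template was approved for
    return "standard"
```

Anything that does not match falls back to the normal path, so a team cannot simply label a high-risk change as standard to skip authorization.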

When designed properly and followed correctly, Standard Change can substantially reduce the number of so-called Normal Changes. After some review,

Release and Deployment

As in many organizations, release, deployment, and change had become conflated, which meant that no one was able to exert the appropriate amount of control over any of these vital practices. By simply mapping each of them out as an individual process within the larger development lifecycle, we made everyone aware of how they differ and where they intersect. We were then able to build the appropriate dependencies between them, providing great flexibility to development while establishing meaningful control for operations.
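The separation of release, change, and deployment can be sketched as three distinct records, with a deployment allowed to proceed only when its release has passed testing and its change has been authorized. The class and field names are illustrative assumptions, not any particular ITSM tool’s data model.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Release:
    version: str
    tests_passed: bool  # release practice: what was built and verified


@dataclass
class Change:
    change_id: str
    authorized: bool  # change enablement: was the risk assessed and accepted


@dataclass
class Deployment:
    release: Release
    change: Change

    def blockers(self) -> List[str]:
        """List what prevents this deployment; empty means it may proceed."""
        problems = []
        if not self.release.tests_passed:
            problems.append(f"release {self.release.version} has not passed testing")
        if not self.change.authorized:
            problems.append(f"change {self.change.change_id} is not authorized")
        return problems

    def may_proceed(self) -> bool:
        return not self.blockers()
```

Keeping the records separate means each practice owns its own check, while the dependency between them lives in one place.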

This was simply the first step toward a true, high-velocity development environment. With expanded visibility and control, IT leadership could now gauge how successful each change was. And because the development teams were now more accountable for the code being released, they became more apt to follow test procedures, which reduced failed deployments (i.e., buggy code in production).

Set Course for Continuous Development

The next step for the organization was to create a path from this new, more transparent, efficient, and successful set of practices to a true CI/CD model. Thanks to the increased transparency between development and operations (a critical goal of DevOps), IT leadership could quantify change success rates, making it possible to analyze the risk of change for each application more effectively. This allowed them to create a policy defining which applications were candidates for CI/CD and which were too risky (or failing too often) to consider. The criteria for this sort of decision are highly subjective and specific to each organization, but from a success-rate standpoint, they settled on a 99% change success rate over a six-month period as the bar an application had to clear before they would even entertain the idea of moving it to CI/CD.
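The eligibility rule can be expressed as a short calculation. This is a minimal sketch assuming each completed change is recorded with its application, completion date, and a success flag, and approximating six months as 183 days; the record structure and function names are hypothetical.

```python
from dataclasses import dataclass
from datetime import date, timedelta
from typing import List, Optional


@dataclass
class ChangeRecord:
    application: str
    completed_on: date
    successful: bool


SIX_MONTHS = timedelta(days=183)  # assumption: six months is roughly 183 days
THRESHOLD = 0.99                  # 99% change success rate, per the policy


def success_rate(records: List[ChangeRecord], application: str,
                 as_of: date) -> Optional[float]:
    """Share of the application's changes in the window that succeeded."""
    start = as_of - SIX_MONTHS
    window = [r for r in records
              if r.application == application and start <= r.completed_on <= as_of]
    if not window:
        return None  # no history, so no basis for a decision
    return sum(r.successful for r in window) / len(window)


def cicd_candidate(records: List[ChangeRecord], application: str,
                   as_of: date) -> bool:
    rate = success_rate(records, application, as_of)
    return rate is not None and rate >= THRESHOLD
```

Returning `None` when there is no history, and treating that as ineligible, keeps an application with no track record from qualifying by default.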

Test Frequently or Suffer the Consequences

One additional advantage that this organization had was that the CISO had taken a firm stance on security testing for all applications. Security is frequently overlooked until the last minute, and it becomes a severe bottleneck for many development efforts because all work stops just before the project is declared

This was a huge step toward Continuous Delivery. With automated security testing already in place, the development teams simply needed to mimic that model: by working with their software testers and quality assurance teams, they could develop automated gateways that would provide the next step in the journey.
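An automated gateway of this kind can be sketched as a function that collects test reports and returns the reasons, if any, that a build may not be promoted. The suite names and the policy (block on any failure, any critical finding, or any missing required suite) are assumptions; a real gate would read reports produced by the organization’s own testing and scanning tools.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class SuiteReport:
    suite: str               # e.g. "security scan", "regression"
    failures: int
    critical_findings: int = 0


def gate(reports: List[SuiteReport], required_suites: List[str]) -> List[str]:
    """Return reasons to block promotion; an empty list opens the gate."""
    reasons = []
    present = {r.suite for r in reports}
    for suite in required_suites:
        if suite not in present:  # fail closed when a suite did not run
            reasons.append(f"missing required suite: {suite}")
    for r in reports:
        if r.failures:
            reasons.append(f"{r.suite}: {r.failures} failing test(s)")
        if r.critical_findings:
            reasons.append(f"{r.suite}: {r.critical_findings} critical finding(s)")
    return reasons
```

Treating a missing suite as a blocker makes the gate fail closed: a build cannot slip through simply because a test run was skipped.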

Automated testing is one of the more contentious concepts in the CI/CD model. Many operations folks (the author included) have taken a long time to psychologically accept that well-crafted automated testing tools are superior to human eyes. Once we get over that hump, it seems obvious, but for those of us steeped in decades of human intervention in code releases, that first step is a doozy. Yet it is the key to the final lock, and it is critically important that the automated testing of code be extremely rigorous. Development teams, operational support teams, end users (when possible), and in some cases even leadership must all have a seat at the table when developing this part of a DevOps infrastructure. Removing the human element is effective only if the replacement toolset is actually better.
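One hedged way to check whether the replacement is actually better is to compare escaped defects (changes that went on to cause problems in production) under manual review and under the automated gate over comparable periods. The function names, the tuple format, and the minimum sample size of 50 are assumptions, and a simple rate comparison like this ignores differences in change size and complexity.

```python
from typing import Tuple

MIN_SAMPLE = 50  # assumption: fewer changes than this is too little evidence


def escape_rate(released: int, escaped_defects: int) -> float:
    """Fraction of released changes that caused a defect in production."""
    if released <= 0:
        raise ValueError("need at least one released change")
    return escaped_defects / released


def automation_is_better(manual: Tuple[int, int], automated: Tuple[int, int],
                         min_sample: int = MIN_SAMPLE) -> bool:
    """Each argument is (changes released, escaped defects) for a comparable period."""
    if manual[0] < min_sample or automated[0] < min_sample:
        return False  # not enough evidence: keep the human review step
    return escape_rate(*automated) < escape_rate(*manual)
```

Requiring a minimum number of changes on both sides avoids declaring a winner on too little evidence; when the data are thin, the sketch keeps the human step.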

The Mother Lode

Once the Change Enablement practice was shored up, each development team had documented its internal release and deployment practices, and IT leadership was on board with the enhancements, it was time for some measurements. As reality set in, the development teams reluctantly admitted that they were nowhere near ready for CI/CD. This was not a failure; it was simply the point in their journey where they realized there was more work to be done. Rather than issuing an edict from on high that there would be no DevOps today, leadership was able to show each team quantitatively where it was coming up short, and that its plans exceeded the organization’s risk appetite. This has led to more honest and valuable discussions, as well as meaningful changes within each development team’s processes to reach their goals of automation and Continuous Deployment. It has also created a more collaborative atmosphere with operational staff, which is another major benefit of moving toward a DevOps model. While the final vision has not yet been achieved, the success the teams have enjoyed with these newly enhanced processes has been essential to the transformation of the entire organization.
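Showing each team where it falls short can be as simple as converting the 99% threshold into a failure budget. This sketch assumes each team’s results for the period are summarized as a pair of total and failed changes; the function names are hypothetical. `Fraction` keeps the arithmetic exact so that floating-point rounding never misstates a team’s budget.

```python
import math
from fractions import Fraction
from typing import Dict, Tuple

TARGET = Fraction(99, 100)  # the 99% change success threshold, kept exact


def failure_budget(total_changes: int) -> int:
    """Most failed changes a period can contain while still meeting TARGET."""
    return math.floor(total_changes * (1 - TARGET))


def shortfall_report(teams: Dict[str, Tuple[int, int]]) -> Dict[str, int]:
    """Map each team to its failed changes above budget (0 means on target).

    `teams` maps a team name to (total changes, failed changes) for the period.
    """
    return {team: max(0, failed - failure_budget(total))
            for team, (total, failed) in teams.items()}
```

For example, a team with 250 changes in the period can absorb at most two failures, while a team with only 40 changes cannot absorb any, which makes the conversation about risk appetite concrete.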

Parting Shot

As complexity and demands continue to mount for IT Operations Management, new methods of working will inevitably be needed. Controlling the narrative and taking the time to make iterative improvements with an eye on the business’s vision will result in better adoption, and it can create a more collaborative environment for the entire IT organization. Development methodologies such as Agile and DevOps are here to stay, and that is a good thing. These ideas can be applied to many of our practices throughout the enterprise. It is important to remember that no single methodology, framework, or standard is going to fit every situation. We must be willing to accept that it will take time to adapt successfully and, more importantly, that we won’t always end up where we thought we would.